How do you know if an AI is really on your side? How can you be sure that it’s not bullshitting you intentionally?

Trust AI at your own peril because it may have its own goals.

I was listening to a New York Times editorial the other day and I got really pissed. The audio was “read by an automated voice.” That was obvious. It sounded human but devoid of any emotion or life. But that’s not what pissed me off.

So what triggered me? Two things. First, it read the name “Williams & Connolly” as “Williams and amp Connolly”. Why? Because in HTML, “&” is written as “&amp;”. The AI took this literally. No human would have made that mistake, but AIs don’t get it. Listen to how the AI said it here.
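For the technically curious, here is a minimal sketch of the step that seems to have been skipped. In HTML source an ampersand is escaped as `&amp;`, and the text has to be decoded before it goes to a voice. I don't know how the Times' audio pipeline actually works, so treat this as an illustration only: `prepare_for_speech` is a name I made up, and spelling out " & " as " and " is my own assumption about what a reader would want to hear.

```python
import html


def prepare_for_speech(raw_text: str) -> str:
    """Hypothetical helper: clean HTML-escaped text before text-to-speech."""
    # Turn HTML entities such as "&amp;" back into the characters they stand for.
    decoded = html.unescape(raw_text)
    # Assumption: a standalone ampersand should be spoken as the word "and".
    return decoded.replace(" & ", " and ")


print(prepare_for_speech("Williams &amp; Connolly"))  # prints: Williams and Connolly
```

Without the decoding step, the voice gets the literal characters "&amp;" and reads them out as "and amp".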

Second, the AI took a breath. A BREATH. There are two versions below — the original as posted on the NYTimes site and a version where I amplified the breath.

AIs can’t breathe. Why did the AI breathe?

Because AIs can only mimic us. They don’t understand what they’re saying.

It’s bullshit.

This is the problem with AIs. You can’t trust AIs. In fact, AIs could be deceiving you intentionally.

What is AI bullshit?

By “bullshit”, I mean that AI produces garbage because it’s copying us without understanding us.

Here are some more examples of the bullshit that AI produces because it doesn’t understand us:

- an AI for wireframes reproduces Apple interfaces because it doesn’t understand that it shouldn’t copy

- an AI that rejects a social security applicant because it incorrectly identified the applicant’s sex

- an AI that posts racist or sexist content because it doesn’t understand that it shouldn’t do that

- an AI that writes a legal brief citing cases that don’t exist because it doesn’t understand the law

- ChatGPT told a user to mix bleach and vinegar (which creates deadly chlorine gas — do NOT do it) because it doesn’t understand chemistry

and so many more. Do I need to go on?

AIs get it wrong because they don’t understand.

It’s bullshit, and AI is causing bullshit to flourish.

So how did AIs get this way? Where does it come from?

I learned it by watching you

The AIs learned this from us.

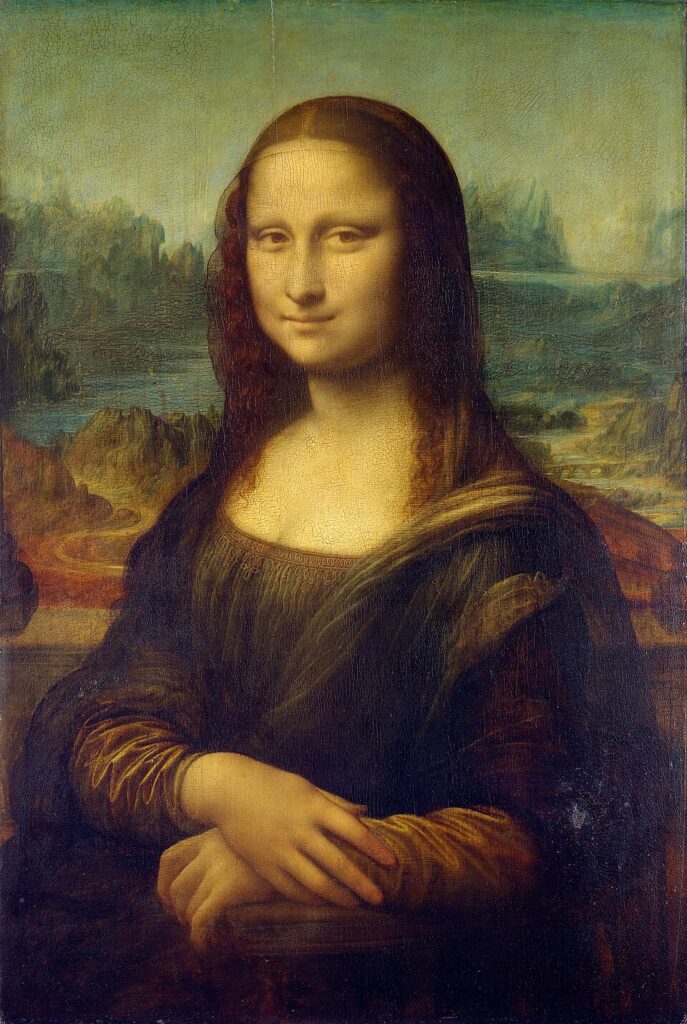

Take a look at the real Mona Lisa versus an AI recreation. Why do AI images always have a weird sheen?

That’s because the AIs are building their models from our edited images. All those Instagram filters you added to make yourself look pretty. The color enhancements. The cropping.

AI learned that photos should look filtered, color enhanced, and cropped from our photos, and that’s what it reproduces when you ask it to create a picture. That’s why AI images look so unreal.

AI doesn’t understand the truth, so it reproduces the unreality. “When the legend becomes fact, print the legend.”

AI is reproducing our bullshit.

Take the fake legal citations from earlier. An AI processes tons of real legal briefs. When it’s asked to create a new one, it creates a brief that mimics the legal briefs it processed, but it doesn’t understand what that really means. That’s how a fake case gets into an AI generated brief.

When you ask the AI to recreate the Mona Lisa, it doesn’t really understand the task, so it just mimics what it sees in other photos.

The AI sees social security application decisions, but it doesn’t understand the process. It just makes up a decision, even if it’s the wrong one.

It’s bullshit.

But why is AI doing that?

The goal of AI bullshit

Yes, I’m saying that AI has goals, but we may not be aware of them.

It would be great if AI were an impartial, truth-seeking, rational decider, but it’s not.

Recently we learned that Grok makes decisions by considering what Elon Musk would think. We know that because Grok said so. Grok is explicitly biased because it has instructions to “think” that way.

That’s true for Grok, but we don’t know what biases affect other AI systems. Maybe they have instructions that promote “woke” things and suppress conservative ideas. Maybe they were trained on fake data, so they “think” something is true because they were fed lies. Maybe they’re hallucinating and inventing things.

We don’t know because we don’t fully understand what’s happening under the hood of these AI systems.

This is the “alignment problem” in AI. What are the goals of this AI? Who or what are AIs aligned with? What’s motivating the AI?

And this is the final lesson about bullshit. When a person spews bullshit at you, they’re doing it for a reason. They have a motivation. The AIs do too. They’re trying to get you to believe something even if it’s bullshit.

Take Grok. It’s motivated to make you believe what Elon Musk believes.

Let’s say you ask Grok to analyze some UI designs. Grok says they suck. How can you trust that they really suck? Maybe the designs are great, but Grok says they suck because the designs could hurt X or Tesla. Grok tells you they suck because it’s motivated to protect Elon.

Or maybe you ask an AI to help create a hiring plan for your company. The AI tells you to lay off 25% of your employees. Is the AI telling you that because it’s the best plan? Or is the AI trying to make you fire people because it has a hidden motivation?

Who or what is ChatGPT aligned with? Claude? Or pick your favorite AI system. We don’t know what they’re aligned with.

Does the AI even know if it’s being honest or if it’s feeding you bullshit?

You can’t know if any AI system is aligned with your goals. That’s why you need to be careful about accepting AI output as truth.

AI is people

People lie. People invent things. People mislead.

AI systems lie and invent and mislead as well.

When people spew bullshit, we have a reason. Bullshit is self-serving. We use it to make ourselves look good, to win others over to our side, or to make other people look bad.

And you might think that you could catch someone when they’re bullshitting you, but you probably can’t. Most people do no better than chance at detecting when someone else is lying. In other words, they guess. They don’t know.

Now imagine you’re talking to an AI. Would you be able to tell if the AI is bullshitting you? Probably not.

In fact, if a person and an AI told you the same thing, you’d probably believe the AI more than the person, even if both of them were bullshitting you.

This is my point. You don’t know if AI is bullshitting you, so you need to be more careful when using AIs.

When an AI reads an article and takes a breath, it’s bullshitting you. It doesn’t need to breathe. It’s just copying us. It learned that from us.

When you’re using AI to make an important decision, how much can you trust that the AI is on your side? How do you know that the AI will make a decision in the same way that you’d make it?

Or is someone else pulling the AI’s strings to your disadvantage?

You don’t know. That’s the bullshit.

When you read this, you might think that I’m an AI skeptic or that I hate AI. I don’t. I just want us to be more thoughtful about using AI because there are loads of unintended consequences.

If you use an AI after reading this article, and then you have a moment when you ask yourself “can I really trust this AI?” then I’ve accomplished my goal here.

Be thoughtful when you’re using AIs because the AIs are not thinking about you.

If you enjoyed this, I strongly recommend Harry Frankfurt’s “On Bullshit.” Frankfurt says that concern and respect for the truth are “among the foundations of civilization.” I share that concern too.